How We Automatically Moderate Posts in Muzz Social at Scale

Background

Muzz Social is the world’s leading Muslim-focused social network. We allow people to share and connect with Muslims around the world.

Muzz Social sends push notifications for posts to users who haven’t been on the platform for 48 hours. These notifications are generated by selecting the most engaging and relevant posts, helping to re-engage users and surface content they might have missed. This feature has proven effective in delivering posts that matter to users.

However, we were concerned that some promoted posts might be harmful. A traditional content moderation system based on banned words would struggle to catch such content.

Our goal was to build a system capable of classifying text at massive scale, as manual moderation was not a scalable option. Currently, Muzz Social has around 850,000 posts, with tens of thousands of new posts and comments added daily.

Experimentation

To evaluate different moderation systems, we built a test dataset of real posts that we had manually identified as harmful or borderline. This gave us a consistent benchmark to compare the accuracy and behavior of various moderation tools under realistic conditions.
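A benchmark like this can be scored with a small harness. The sketch below is illustrative rather than our actual tooling: `LabeledPost` and `evaluate` are hypothetical names, and it assumes each post carries a human-assigned harmful/not-harmful label and that each candidate tool can be wrapped as a function returning `True` when it flags a post.

```python
from dataclasses import dataclass
from typing import Callable, Iterable


@dataclass(frozen=True)
class LabeledPost:
    text: str
    harmful: bool  # label assigned by a human reviewer (assumed schema)


def evaluate(classifier: Callable[[str], bool], dataset: Iterable[LabeledPost]) -> dict:
    """Score a classifier (True = flagged as harmful) against the human labels."""
    tp = fp = fn = tn = 0
    for post in dataset:
        flagged = classifier(post.text)
        if flagged and post.harmful:
            tp += 1
        elif flagged and not post.harmful:
            fp += 1  # benign post wrongly flagged
        elif not flagged and post.harmful:
            fn += 1  # harmful post missed
        else:
            tn += 1
    total = tp + fp + fn + tn
    return {
        "accuracy": (tp + tn) / total if total else 0.0,
        "precision": tp / (tp + fp) if (tp + fp) else 0.0,
        "recall": tp / (tp + fn) if (tp + fn) else 0.0,
        "false_positives": fp,
        "false_negatives": fn,
    }
```

Running every candidate through the same function keeps the comparison consistent, and tracking false positives separately makes over-flagging of benign posts visible.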

We initially experimented with simple sentiment analysis (positive vs. negative), but it performed poorly. It failed to flag posts with neutral sentiment that still contained highly hateful content, and it struggled with more nuanced forms of harm. We then explored hate-speech detection tools from Amazon Web Services (AWS) and Google Cloud Platform (GCP). These performed better and allowed us to label and classify content more effectively, giving us valuable data.

However, during our testing, we found that these systems exhibited institutional bias. Posts containing Islam-specific terms like “Islam” or “Allah” were often misclassified, reflecting a broader issue in the tech industry.

We then turned to large language models (LLMs), evaluating tools from OpenAI and Anthropic among others. LLMs are better suited to handling nuance and allow for extensive customization. They also performed very well on our test data.

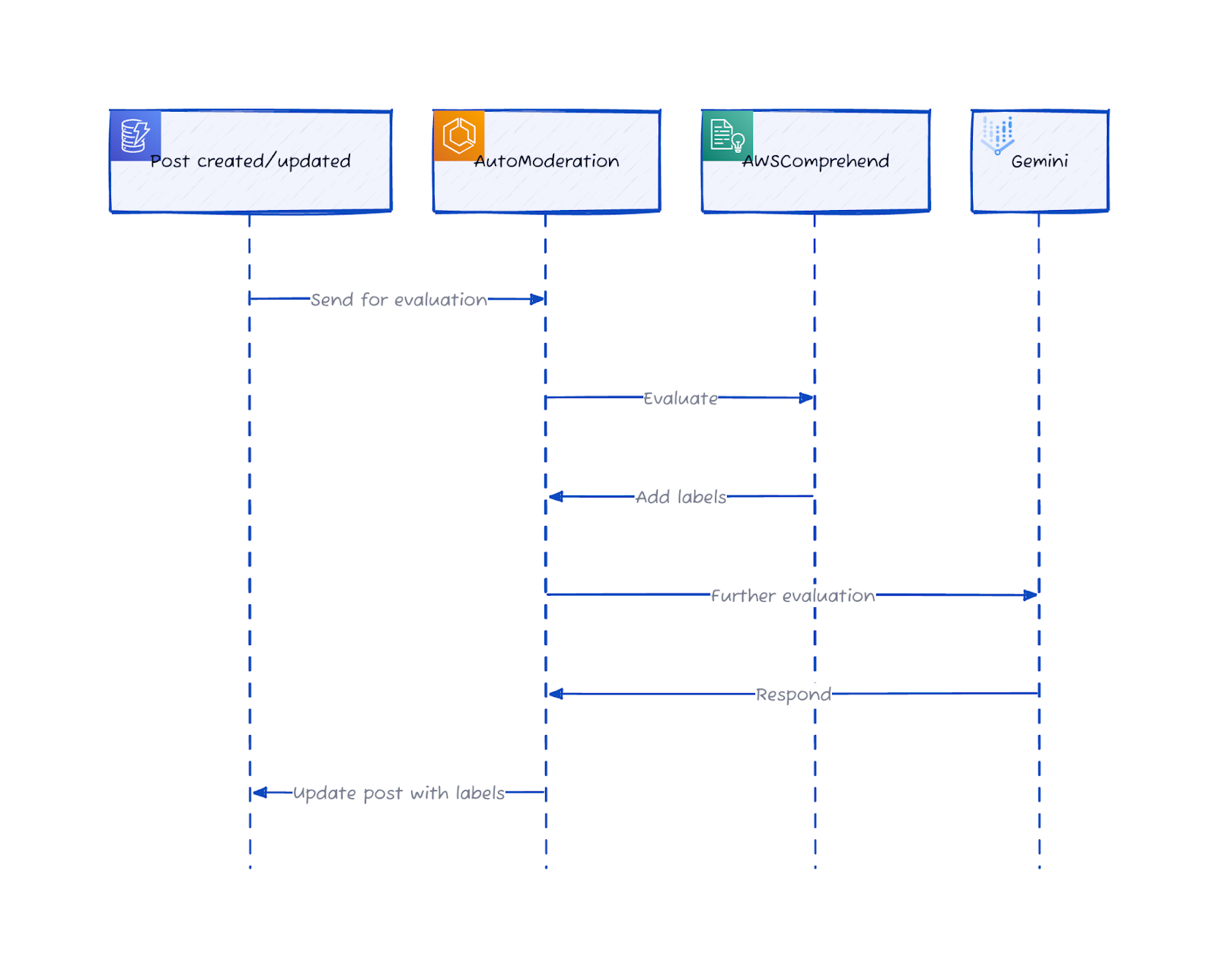

In the end, we chose Google’s Gemini (an LLM) as it best met our needs in terms of cost, functionality, and, most importantly, performance. We also integrated AWS Comprehend as a first-pass filter. If a post is obviously harmful, Comprehend labels it and it is marked as not safe for trending. Posts that pass this initial filter are then analyzed by Gemini. This approach reduces the number of calls made to Gemini while maintaining a high standard of moderation.
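The two-stage flow can be sketched roughly as follows. This is a simplified illustration, not our production code: `first_pass_score` and `llm_is_safe` are hypothetical stand-ins for the AWS Comprehend and Gemini calls, and the `0.9` threshold is an assumed value chosen only for the example.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Verdict:
    safe_for_trending: bool
    decided_by: str  # "first_pass" or "llm"


def moderate(
    text: str,
    first_pass_score: Callable[[str], float],  # hypothetical wrapper: harm score in [0, 1]
    llm_is_safe: Callable[[str], bool],        # hypothetical wrapper around the LLM call
    threshold: float = 0.9,                    # assumed cut-off for "obviously harmful"
) -> Verdict:
    # Stage 1: the cheap classifier rejects only obviously harmful posts.
    if first_pass_score(text) >= threshold:
        return Verdict(safe_for_trending=False, decided_by="first_pass")
    # Stage 2: every remaining post goes to the LLM for the final decision.
    return Verdict(safe_for_trending=llm_is_safe(text), decided_by="llm")
```

Note that the first pass can only reject a post, never approve one, so the LLM is skipped only for content that would be excluded from trending anyway.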

Implementation

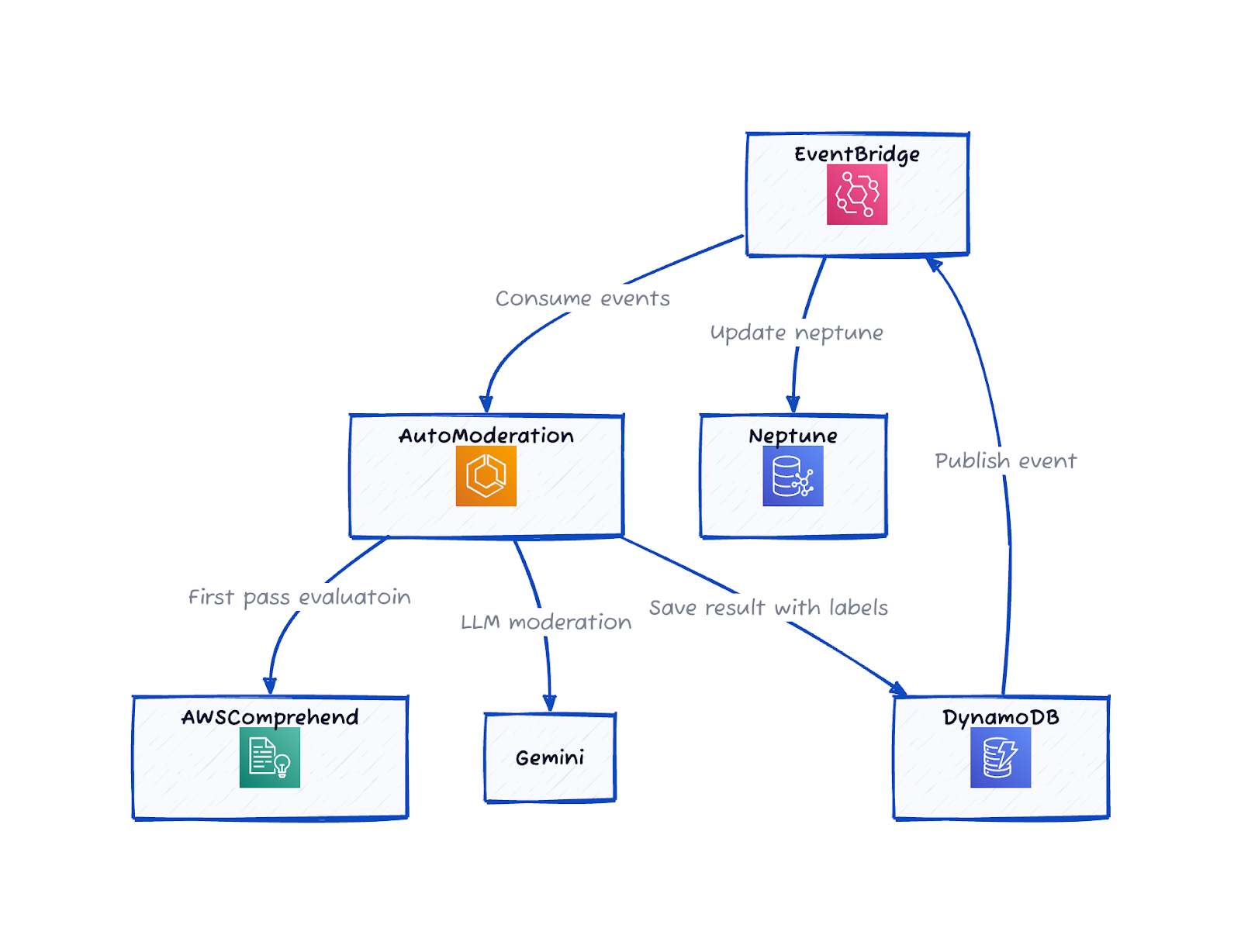

On the engineering side, we created a new service dedicated to text moderation. The reasoning was twofold: other parts of Muzz might also need this capability, and a standalone service is easier to monitor.

At Muzz, we use an event-driven architecture (via AWS EventBridge). This made the integration straightforward; we simply created a new event rule that triggers when posts are created or updated. These events are placed in a queue, which the auto-moderation service consumes. This setup provides automatic retries and allows the system to scale dynamically as the queue grows or shrinks.
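As an illustration, a queue consumer for these events might look like the sketch below. Several details are assumptions that this post does not specify: that the queue is SQS consumed by a Lambda-style handler with partial batch responses enabled, that each message body is the EventBridge event envelope, and that the `detail-type` values are `PostCreated` and `PostUpdated`. `handle_batch` and `moderate_post` are hypothetical names.

```python
import json
from typing import Callable

# Hypothetical detail-type values; the real event names are not given here.
MODERATED_EVENT_TYPES = {"PostCreated", "PostUpdated"}


def handle_batch(event: dict, moderate_post: Callable[[dict], None]) -> dict:
    """Process a batch of queue messages, each wrapping one EventBridge event."""
    failures = []
    for record in event.get("Records", []):
        try:
            envelope = json.loads(record["body"])
            if envelope.get("detail-type") in MODERATED_EVENT_TYPES:
                moderate_post(envelope["detail"])
        except Exception:
            # Report only this message as failed so the queue redelivers it,
            # while the rest of the batch is acknowledged.
            failures.append({"itemIdentifier": record["messageId"]})
    return {"batchItemFailures": failures}
```

Reporting failures per message is what gives the retry behavior described above: one bad post does not force the whole batch to be reprocessed.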

After each post is evaluated, we mark it to indicate whether it’s safe to be included in trending notifications. The marker is written to our DynamoDB database, which serves as our source of truth. Updating the DynamoDB record in turn triggers a corresponding update in our graph database, which we use to fetch trending posts.

Crucially, we assume all posts are not suitable by default and only mark them as safe once the auto-moderation confirms they are. This prevents failures or null values from allowing potentially harmful content to slip through.
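A minimal sketch of this fail-closed behavior, under stated assumptions: `safe_marker`, `eligible_for_trending`, and the `safe_for_trending` field name are hypothetical, and `classify_is_safe` stands in for the full moderation call.

```python
from typing import Callable, Optional


def safe_marker(text: str, classify_is_safe: Callable[[str], Optional[bool]]) -> bool:
    """Return True only when moderation explicitly confirms the post is safe."""
    try:
        result = classify_is_safe(text)
    except Exception:
        return False  # a moderation failure never promotes a post
    return result is True  # None or any other non-True value stays unsafe


def eligible_for_trending(post: dict) -> bool:
    # A missing or null marker is treated exactly like an explicit False.
    return post.get("safe_for_trending") is True
```

Checking `is True` rather than truthiness on both the write and read paths means a timeout, a malformed response, or a record that was never processed all resolve to "not safe".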

Overall, we’re extremely happy with the LLM-based solution. In just a few months, it has moderated over 80,000 posts. We’ve reviewed these posts and observed a noticeable increase in feed sessions since implementation, along with a significant improvement in the quality of posts shown to users.

We’re also exploring additional ways to integrate LLMs more deeply into Muzz.